The AI Periodic Table – A Deep Technical Framework for Agentic AI

As Generative AI systems evolve beyond simple chat interfaces, modern software engineers are now required to reason about agents, memory, orchestration, retrieval pipelines, validation layers, and model specialization as first-class architectural concerns. What was once a monolithic “LLM application” has transformed into a distributed, multi-layered AI system.

Enterprise AI systems are growing increasingly complex. Concepts such as agents, RAG, embeddings, guardrails, vector databases, fine-tuning, and orchestration frameworks are now common in engineering discussions, yet many teams struggle to understand how these components fit together into a coherent system.

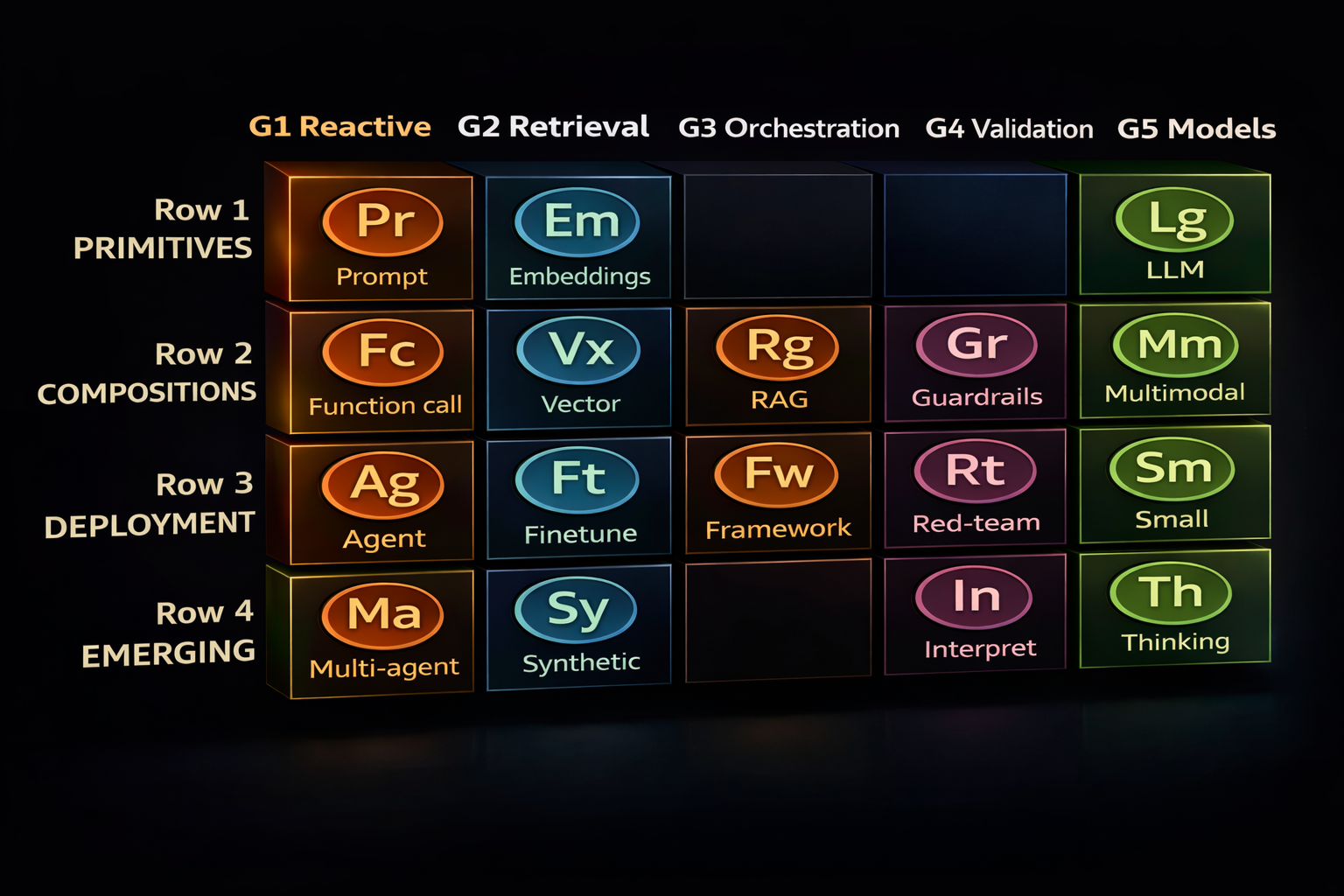

One effective way to structure this complexity is the AI Periodic Table metaphor. Similar to the chemical periodic table, it organizes AI capabilities into columns (functional families) and rows (maturity levels). This framework allows architects and engineers to decode any AI architecture by identifying which elements are present, how they interact, and what might be missing.

In this article, we walk through every element of the AI Periodic Table—such as Pr (Prompt), Em (Embeddings), Ag (Agent), Rg (RAG), and Lg (LLM)—explain their meaning and role, and show how they combine into real-world enterprise workflows, also referred to as architectural “reactions”.

Families (Columns G1–G5)

The table is organized into five column families, each grouping capabilities that behave similarly at a system level.

G1 – Reactive (Action and Control)

Reactive elements respond directly to inputs and control system behavior. They determine how an AI system reacts and what actions it takes.

- Prompting

- Function Calling

- Agents

- Multi-Agent Systems

G2 – Retrieval (Memory and Knowledge)

Retrieval elements provide memory and external knowledge. They ground models in real data and enable semantic recall.

- Embeddings

- Vector Databases

- Fine-Tuning

- Synthetic Data

G3 – Orchestration (Coordination)

Orchestration elements glue components together into workflows, controlling execution order, data flow, and coordination.

- RAG Pipelines

- Orchestration Frameworks

- Tool and Protocol Layers

G4 – Validation (Safety and Quality)

Validation elements ensure correctness, safety, and trust. They prevent failures before systems reach users.

- Guardrails

- Red Teaming

- Interpretability Tools

G5 – Models (Core Intelligence)

The Models column represents the inference engines that generate intelligence.

- Large Language Models (LLMs)

- Multimodal Models

- Small and Edge Models

- Thinking and Reasoning Models

This column-based view allows engineers to ask critical questions such as: Does the system have memory? Is it orchestrated? Is it validated? Most production systems span multiple families simultaneously.

Periods (Rows 1–4)

Rows represent increasing levels of system maturity and architectural sophistication.

- Row 1 – Primitives: Atomic building blocks, suitable for demos and experiments.

- Row 2 – Compositions: Combined primitives forming usable patterns.

- Row 3 – Deployment: Production-ready systems with reliability and governance.

- Row 4 – Emerging: Cutting-edge concepts still stabilizing.

Designers can read the table vertically to evaluate production readiness. Systems confined to Row 1 or Row 2 rarely meet enterprise requirements.

Row 1: Primitives (The Atoms)

Pr – Prompting (Reactive)

Prompts are natural-language instructions to the model, such as “Summarize this report” or “Generate a client email”. Prompts are highly sensitive—small changes can lead to large output differences. Prompt engineering is the simplest and most direct interface to LLMs.

Em – Embeddings (Retrieval)

Embeddings are numerical vectors that encode semantic meaning. They enable similarity search, clustering, and retrieval. Embeddings form the foundation of external memory by turning knowledge into searchable mathematics.

Lg – Large Language Models (Models)

LLMs are the core inference engines. They consume prompts and context to generate outputs. In system diagrams, the LLM is often central, with all other components reacting around it.

Small models (Sm) are often considered alongside LLMs, particularly for cost-sensitive, low-latency, or on-device scenarios.

Row 2: Compositions (The Molecules)

Fc – Function Calling (Reactive)

Function calling enables LLMs to invoke external tools or APIs before answering. This transforms AI from purely generative systems into actionable systems capable of interacting with real-world services.

Vx – Vector Databases (Retrieval)

Vector databases store and index embeddings at scale, enabling fast similarity search and long-term memory. Any serious RAG pipeline depends on a vector database as its knowledge store.

Rg – Retrieval-Augmented Generation (Orchestration)

RAG pipelines ground LLM outputs in external data. Queries are embedded, relevant documents are retrieved from a vector database, and the retrieved context is injected into the prompt, significantly reducing hallucinations.

Gr – Guardrails (Validation)

Guardrails enforce safety, compliance, and quality constraints at runtime. They prevent sensitive data leaks, policy violations, and off-topic responses. Guardrails act as the seatbelts of enterprise AI systems.

Other Row 2 elements may include multimodal models, prompt chaining, and structured output schemas, all of which improve reliability.

Row 3: Deployment (Production Patterns)

Ag – Agents (Reactive)

Agents introduce an iterative think–act–observe loop. Instead of single responses, agents plan, execute, call tools repeatedly, and adapt until goals are achieved, enabling semi-autonomous behavior.

Ft – Fine-Tuning (Retrieval)

Fine-tuning embeds domain knowledge into model weights. It improves consistency for narrow domains but reduces flexibility and requires retraining.

Fw – Frameworks (Orchestration)

Frameworks provide the plumbing that coordinates prompts, memory, tools, error handling, and system state, connecting components across families into coherent workflows.

Rt – Red Teaming (Validation)

Red teaming involves adversarial testing to uncover vulnerabilities such as prompt injection, unauthorized actions, or data leakage. It is critical for high-stakes enterprise deployments.

Row 4: Emerging (Frontier Patterns)

Ma – Multi-Agent Systems (Reactive)

Multi-agent systems involve multiple specialized agents collaborating or competing. They promise greater scalability and specialization but introduce coordination and safety challenges.

Sy – Synthetic Data (Retrieval)

Synthetic data is AI-generated data used for training, evaluation, and edge-case coverage, improving system robustness where real data is scarce.

(Orchestration Gap)

There is currently no standardized emergent orchestration primitive at this maturity level, reflecting an open research gap.

In – Interpretability (Validation)

Interpretability tools explain model behavior and decisions, supporting debugging, compliance, and trust.

Th – Thinking Models (Models)

Thinking models allocate additional computation for explicit reasoning, such as chain-of-thought or tree-of-thought approaches, enabling deeper planning and problem-solving.

Example Architectures (Reactions)

RAG-Based Knowledge Assistant

Embeddings → Vector DB → RAG → Prompt → LLM → Guardrails

Agentic Loop

Agent ↔ Function Calls → External APIs → Framework

Enterprise Considerations

- Balance RAG and fine-tuning based on flexibility versus consistency.

- Use small models for scale and latency, large models for reasoning.

- Apply guardrails and red teaming before production deployment.

- Decouple components so they can scale independently.

Conclusion

The AI Periodic Table provides a systems-level mental model for designing, evaluating, and scaling agentic AI systems. By mapping architectures to standardized elements, teams gain clarity, safety, and predictability.

It encourages layered thinking: start with prompts and models, add memory and orchestration, then enforce validation to reach enterprise-grade AI.